@KeonsinKryte • Mauricio Botero

UX Designer | Researcher


@UsariaDesign / Meta

🛡️

Evaluating new security features for Facebook.

Meta

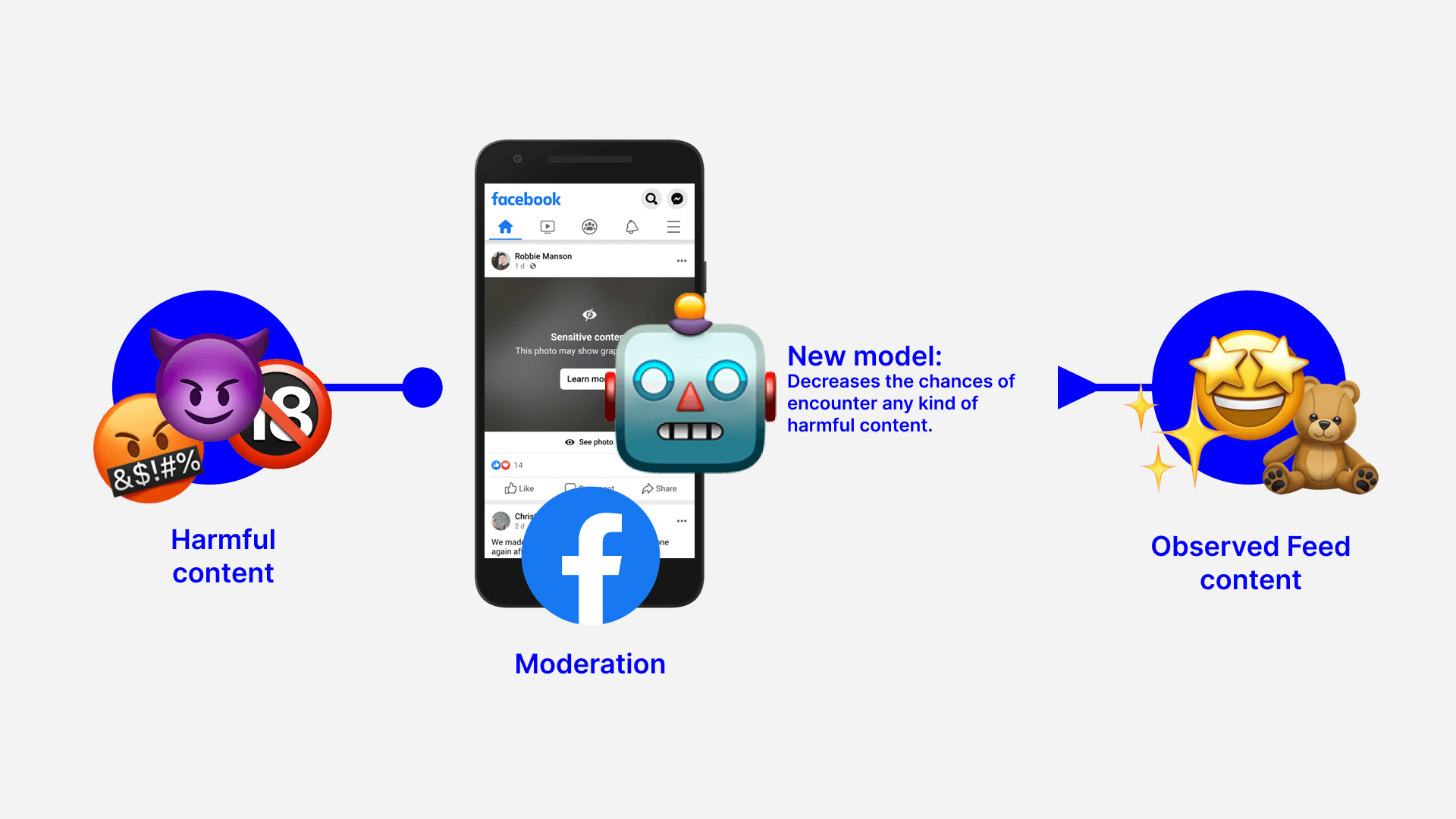

Facebook tests new safety features for content moderation to ensure a safer online community. However, some features may inadvertently draw attention to unwanted content.

🎯

Our challenge

•

Understand how people determine what is harmful content.

•

Find out if the tools that Facebook is implementing help reduce the consumption of harmful content.

•

Determine alternatives that limit the display of harmful content.

🔴

What we discovered

1.

For most participants, harmful content includes:

Traffic accidents, blood, or death.

Pornography or sexually explicit content.

Terrorism.

Any depiction of abuse or violence.

Any depiction of animal abuse or child abuse.

2.

Facebook's efforts to warn people about unwanted content could be fueling curiosity and greater consumption.

3.

The visual cues used to warn users about harmful content are prominent and draw attention to those posts in the feed.

4.

Participants still view unwanted content even when a warning is shown.

🟠

What we defined

• What?

Warning screens. Do they need improvement, or is there something else we can do to reduce the consumption of harmful content?

• Why?

Most people who are exposed to this harmful content express revulsion and decrease their interaction with the platform.

• Where?

In the Colombian pre-election context, people should not be exposed to this kind of harmful content in their feeds.

🔵🟢

What we recommend and developed

We recommended using tags or descriptions as alt labels to describe the content behind a warning screen. This could reduce curiosity about the post and give users enough context about what the covered image shows.

Facebook should assess whether it is incentivizing the consumption of harmful content by using prominent labels, and then define how prominent the visual cues should be to meet its safety goals.

A brief explanation of the algorithm change

• Meta 2022

In the end, shadow banning, long the subject of debate, turned out to be the best option for reducing the consumption of this kind of post.

Impact. Since Q4 2022, Facebook has been running various A/B tests in different regions to gather information about the impact and consumption of this content over the course of a year.

We hope that the changes we recommended, and that were made to the algorithm, have a real impact on people and their context.

So, what did I learn?

I was genuinely surprised by the ramifications and social implications that something as seemingly simple as a social media platform has on the social fabric, its customs, and the ways people approach both politics and daily life.


© KeonsinKryte 2023